Response process includes information about whether the actions or thoughts of the subjects actually match the test and also information regarding training for the raters/observers, instructions for the test-takers, instructions for scoring, and clarity of these materials.

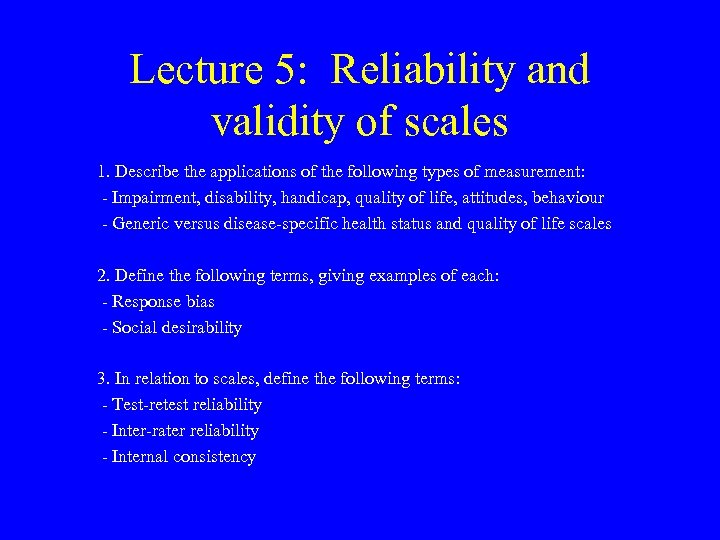

Provide information such as who created the instrument (national experts would confer greater validity than local experts, who in turn would have more validity than nonexperts) and other steps that support the instrument has the appropriate content. Evidence can be found in content, response process, relationships to other variables, and consequences.Ĭontent includes a description of the steps used to develop the instrument. Evidence can be assembled to support, or not support, a specific use of the assessment tool. , 9,10 Determining validity can be viewed as constructing an evidence-based argument regarding how well a tool measures what it is supposed to do. Validity of assessment instruments requires several sources of evidence to build the case that the instrument measures what it is supposed to measure. How is the validity of an assessment instrument determined? 4 – 7, 8 This model looks at the overall reliability of the results. Interrater reliability is used to study the effect of different raters or observers using the same tool and is generally estimated by percent agreement, kappa (for binary outcomes), or Kendall tau.Īnother method uses analysis of variance (ANOVA) to generate a generalizability coefficient, to quantify how much measurement error can be attributed to each potential factor, such as different test items, subjects, raters, dates of administration, and so forth. Administer the assessment instrument at 2 separate times for each subject and calculate the correlation between the 2 different measurements. To perform a test/retest, you must be able to minimize or eliminate any change (ie, learning) in the condition you are measuring, between the 2 measurement times. Test/retest is a more conservative estimate of reliability than Cronbach alpha, but it takes at least 2 administrations of the tool, whereas Cronbach alpha can be calculated after a single administration. 
5 Cronbach alpha calculates correlation among all the variables, in every combination a high reliability estimate should be as close to 1 as possible.įor test/retest the test should give the same results each time, assuming there are no interval changes in what you are measuring, and they are often measured as correlation, with Pearson r. That is, the correlation among the answers is measured.Ĭronbach alpha is a test of internal consistency and frequently used to calculate the correlation values among the answers on your assessment tool. Sometimes reliability is referred to as internal validity or internal structure of the assessment tool.įor internal consistency 2 to 3 questions or items are created that measure the same concept, and the difference among the answers is calculated.

Reliability can be estimated in several ways the method will depend upon the type of assessment instrument.

Using an instrument with high reliability is not sufficient other measures of validity are needed to establish the credibility of your study. Examples of assessments include resident feedback survey, course evaluation, written test, clinical simulation observer ratings, needs assessment survey, and teacher evaluation. Thus, reliability and validity must be examined and reported, or references cited, for each assessment instrument used to measure study outcomes. Validity is not a property of the tool itself, but rather of the interpretation or specific purpose of the assessment tool with particular settings and learners.Īssessment instruments must be both reliable and valid for study results to be credible.

Here validity refers to how well the assessment tool actually measures the underlying outcome of interest. For outcome measures such as surveys or tests, validity refers to the accuracy of measurement. Validity in research refers to how accurately a study answers the study question or the strength of the study conclusions.
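The Cronbach alpha statistic described above can be computed from a respondents-by-items score table. A minimal sketch, assuming the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores), where k is the number of items; the function name `cronbach_alpha` and the list-of-rows layout are illustrative assumptions, not the API of any particular statistics package.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach alpha for a respondents x items score table (hypothetical helper).

    scores[r][i] is respondent r's score on item i.
    """
    k = len(scores[0])  # number of items
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    # Sample variance of each item across respondents
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of each respondent's total score
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical data: 3 respondents answering 2 items meant to measure one concept
print(round(cronbach_alpha([[2, 3], [4, 4], [3, 5]]), 3))  # 0.667
```

When every item ranks respondents identically, the item variances add up to a small share of the total-score variance and alpha approaches 1, which is why values near 1 indicate high internal consistency.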
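For test/retest reliability, the correlation between the 2 administrations can be calculated with Pearson r. The sketch below computes r by hand so each step is visible; the function name `pearson_r` and the scores are hypothetical. Python 3.10 and later also provide `statistics.correlation`, which computes the same Pearson coefficient.

```python
from math import sqrt


def pearson_r(first: list[float], second: list[float]) -> float:
    """Pearson r between two administrations of the same instrument (hypothetical helper).

    first[i] and second[i] must be the same subject's score at time 1 and time 2.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least 2 subjects")
    n = len(first)
    mean1 = sum(first) / n
    mean2 = sum(second) / n
    # Cross-products and sums of squares around each administration's mean
    sxy = sum((a - mean1) * (b - mean2) for a, b in zip(first, second))
    sxx = sum((a - mean1) ** 2 for a in first)
    syy = sum((b - mean2) ** 2 for b in second)
    if sxx == 0 or syy == 0:
        raise ValueError("scores do not vary at one of the time points")
    return sxy / sqrt(sxx * syy)


# Hypothetical scores for 5 subjects tested twice, with no learning in between
time1 = [70, 82, 65, 90, 75]
time2 = [72, 80, 66, 91, 73]
print(round(pearson_r(time1, time2), 3))
```

Pairing scores by subject is the essential design choice: the correlation only reflects reliability if each position in both lists belongs to the same person.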
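Percent agreement and kappa, two of the interrater reliability estimates mentioned above, can be sketched for the simple case of 2 raters scoring the same subjects on a binary scale. This assumes Cohen's kappa specifically (designs with more than 2 raters typically use other kappa variants, such as Fleiss kappa); the function names and ratings are hypothetical.

```python
from collections import Counter


def percent_agreement(rater_a: list[int], rater_b: list[int]) -> float:
    """Proportion of subjects given the same rating by both raters (hypothetical helper)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must rate the same, non-empty set of subjects")
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohen_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Cohen's kappa: observed agreement corrected for chance agreement (hypothetical helper)."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: for each category, product of the raters' marginal proportions
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical binary ratings (1 = competent, 0 = not yet competent) of 4 residents
a = [1, 1, 0, 0]
b = [1, 0, 0, 0]
print(percent_agreement(a, b))  # 0.75
print(cohen_kappa(a, b))        # 0.5
```

The example shows why kappa is usually reported alongside percent agreement: the raters agree on 75% of residents, but half of that agreement would be expected by chance from their marginal rating rates alone.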
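For ordinal ratings (for example, a 1 to 5 scale), Kendall tau compares how 2 raters order every pair of subjects. The text does not specify a variant; this sketch assumes tau-b, which adjusts for tied scores and is the default variant of `scipy.stats.kendalltau`. The function name and ratings are hypothetical, and the pairwise loop is O(n²), which is fine for typical rater-study sample sizes.

```python
from math import sqrt


def kendall_tau_b(rater_a: list[float], rater_b: list[float]) -> float:
    """Kendall tau-b between two raters' ordinal scores (hypothetical helper)."""
    if len(rater_a) != len(rater_b) or len(rater_a) < 2:
        raise ValueError("need two equal-length lists with at least 2 subjects")
    concordant = discordant = tied_a_only = tied_b_only = 0
    n = len(rater_a)
    for i in range(n):
        for j in range(i + 1, n):
            da = rater_a[i] - rater_a[j]
            db = rater_b[i] - rater_b[j]
            if da == 0 and db == 0:
                continue  # tied for both raters: excluded from tau-b
            if da == 0:
                tied_a_only += 1
            elif db == 0:
                tied_b_only += 1
            elif (da > 0) == (db > 0):
                concordant += 1  # both raters order this pair the same way
            else:
                discordant += 1  # the raters order this pair in opposite ways
    denom = sqrt((concordant + discordant + tied_a_only)
                 * (concordant + discordant + tied_b_only))
    if denom == 0:
        raise ValueError("tau-b is undefined when a rater gives every subject the same score")
    return (concordant - discordant) / denom


# Hypothetical 1-5 ratings of the same 5 residents by two observers
print(round(kendall_tau_b([3, 4, 2, 5, 4], [3, 5, 2, 4, 4]), 3))
```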
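The ANOVA approach to a generalizability coefficient can be sketched for the simplest case: a fully crossed persons × raters design in which every rater scores every person once. This is an assumption for illustration; designs that also vary items or administration dates need additional factors. Variance components are estimated from the two-way ANOVA mean squares, negative estimates are set to 0 (a common convention), and the relative G coefficient is var(persons) / (var(persons) + var(residual) / number of raters). The function name `g_study` is hypothetical.

```python
def g_study(scores: list[list[float]]) -> dict[str, float]:
    """One-factor (persons x raters) generalizability analysis (hypothetical helper).

    scores[p][r] is rater r's score for person p; every rater scores every person.
    """
    n_p, n_r = len(scores), len(scores[0])
    if n_p < 2 or n_r < 2:
        raise ValueError("need at least 2 persons and 2 raters")
    grand = sum(map(sum, scores)) / (n_p * n_r)
    person_means = [sum(row) / n_r for row in scores]
    rater_means = [sum(row[r] for row in scores) / n_p for r in range(n_r)]

    # Two-way ANOVA sums of squares without replication
    ss_p = n_r * sum((m - grand) ** 2 for m in person_means)
    ss_r = n_p * sum((m - grand) ** 2 for m in rater_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_res = ss_total - ss_p - ss_r  # person x rater interaction confounded with error

    ms_p = ss_p / (n_p - 1)
    ms_r = ss_r / (n_r - 1)
    ms_res = ss_res / ((n_p - 1) * (n_r - 1))

    # Variance components from expected mean squares; negative estimates set to 0
    var_p = max(0.0, (ms_p - ms_res) / n_r)
    var_r = max(0.0, (ms_r - ms_res) / n_p)
    var_res = max(0.0, ms_res)

    denom = var_p + var_res / n_r
    if denom == 0:
        raise ValueError("no score variance to partition")
    return {"persons": var_p, "raters": var_r, "residual": var_res,
            "g_coefficient": var_p / denom}


# Hypothetical scores: 3 residents, each rated by the same 2 observers
print(g_study([[2, 3], [4, 4], [3, 5]]))
```

The returned components show how much score variance is attributable to true differences between persons, to systematic rater leniency or severity, and to residual error; the rater component does not enter this relative coefficient. For this one-factor design, the relative G coefficient equals Cronbach alpha computed with raters treated as items.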
